ASPRS Standards & Professional Specifications

What is LiDAR Accuracy?

LiDAR accuracy describes how close measured values are to the true position of points on the Earth’s surface. Unlike precision (which measures repeatability), accuracy measures correctness against a known reference.

Absolute vs Relative Accuracy

LiDAR accuracy specifications include two distinct measurements that serve different purposes.

Absolute Accuracy

Absolute accuracy compares LiDAR measurements to independently surveyed checkpoints, that is, ground points that were not used to calibrate or adjust the data. This tells you how well the data represents real-world coordinates.

It is assessed using Root Mean Square Error (RMSE) calculated against checkpoints. For vertical accuracy, checkpoints are typically placed on flat, open terrain (bare earth) to minimize interpolation errors.

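The accuracy achieved on such terrain has traditionally been called fundamental vertical accuracy (FVA). Current ASPRS standards report it as non-vegetated vertical accuracy (NVA).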
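The RMSE calculation described above can be sketched in a few lines. This is a minimal illustration, not a prescribed workflow: the function name `vertical_rmse` and the elevation values are made up for the example, and the 1.96 multiplier is the conventional way to express RMSE at a 95% confidence level, which assumes the errors are normally distributed with no systematic bias.

```python
import math

def vertical_rmse(lidar_z, checkpoint_z):
    """Return the RMSE of LiDAR elevations against surveyed checkpoint elevations."""
    if len(lidar_z) != len(checkpoint_z) or not lidar_z:
        raise ValueError("need two non-empty sequences of equal length")
    squared_errors = [(l - c) ** 2 for l, c in zip(lidar_z, checkpoint_z)]
    return math.sqrt(sum(squared_errors) / len(squared_errors))

# Hypothetical elevations in metres: LiDAR surface value at each checkpoint
# location, and the independently surveyed elevation of that checkpoint.
lidar_z = [101.02, 98.47, 105.11, 99.95]
checkpoint_z = [101.00, 98.50, 105.05, 100.00]

rmse = vertical_rmse(lidar_z, checkpoint_z)
accuracy_95 = 1.96 * rmse  # assumes unbiased, normally distributed errors

print(f"RMSEz = {rmse:.3f} m, 95% confidence = {accuracy_95:.3f} m")
```

A real assessment would use many more checkpoints than four; the short lists here only keep the arithmetic easy to follow.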

Relative Accuracy

Relative accuracy measures internal consistency within the dataset without external reference points. It indicates how well individual points align with neighboring points.

Two types are commonly assessed:

- Within-swath accuracy: Consistency of points collected in a single flight line

- Swath-to-swath accuracy: Alignment between adjacent, overlapping flight lines

Good relative accuracy is essential for slope analysis, drainage modeling, and contour generation.
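One way to picture a swath-to-swath check is to compare elevations from two overlapping flight lines at the same locations. The sketch below assumes the two swaths have already been sampled at matching locations (for example, as per-cell surface values in the overlap area); the function name, the statistics reported, and the sample values are illustrative choices rather than a standard procedure.

```python
import math

def swath_difference_stats(swath_a_z, swath_b_z):
    """Summarise elevation differences between two overlapping swaths.

    Both inputs hold elevations sampled at the same locations, one per swath.
    """
    if len(swath_a_z) != len(swath_b_z) or not swath_a_z:
        raise ValueError("need two non-empty sequences of equal length")
    dz = [a - b for a, b in zip(swath_a_z, swath_b_z)]
    return {
        "mean_dz": sum(dz) / len(dz),                           # systematic offset
        "rms_dz": math.sqrt(sum(d * d for d in dz) / len(dz)),  # overall mismatch
        "max_abs_dz": max(abs(d) for d in dz),                  # worst single location
    }

# Hypothetical elevations in metres at four shared locations in the overlap.
swath_a = [12.31, 12.48, 12.90, 13.05]
swath_b = [12.28, 12.50, 12.86, 13.00]

stats = swath_difference_stats(swath_a, swath_b)
print(stats)
```

A mean difference well away from zero suggests a systematic offset between flight lines, while a large RMS difference with a near-zero mean points to noise or local misalignment.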

ASPRS Accuracy Standards

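The American Society for Photogrammetry and Remote Sensing (ASPRS) publishes positional accuracy standards that define accuracy classes for LiDAR data in terms of RMSE. These are commonly referenced in project specifications, often alongside the quality levels (QL0 to QL3) of the USGS Lidar Base Specification.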

Higher quality levels require more ground control points for validation and typically cost more to acquire.

Factors Affecting LiDAR Accuracy

Multiple factors influence the final accuracy of LiDAR-derived products. Understanding these helps set realistic expectations for your data.

1. System Calibration

LiDAR systems require precise calibration of three integrated components: the laser scanner, Inertial Navigation System (INS), and GNSS receiver. Calibration errors propagate through the entire dataset.

2. Flight Parameters

Flying height, speed, and scan angle all affect accuracy. Lower altitude improves point density and reduces ranging errors. Slower speed increases along-track point density. Narrower scan angles reduce edge distortion.
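The effect of flight parameters on point density can be approximated with simple geometry. The sketch below assumes a single pass over flat terrain, with every emitted pulse landing inside the swath; the function name and the sensor values are hypothetical, and real systems deviate from this because of scan pattern, terrain relief, and multiple returns.

```python
import math

def nominal_pulse_density(pulse_rate_hz, speed_m_s, height_m, half_scan_angle_deg):
    """Approximate pulses per square metre for one pass over flat terrain."""
    # Swath width on the ground from flying height and half scan angle.
    swath_width_m = 2.0 * height_m * math.tan(math.radians(half_scan_angle_deg))
    # Ground area swept per second of flight.
    area_per_second_m2 = speed_m_s * swath_width_m
    return pulse_rate_hz / area_per_second_m2

# Hypothetical sensor: 200 kHz pulse rate, 20 degree half scan angle.
density_high = nominal_pulse_density(200_000, speed_m_s=60, height_m=1000, half_scan_angle_deg=20)
density_low = nominal_pulse_density(200_000, speed_m_s=60, height_m=500, half_scan_angle_deg=20)
density_slow = nominal_pulse_density(200_000, speed_m_s=30, height_m=1000, half_scan_angle_deg=20)

print(f"1000 m at 60 m/s: {density_high:.2f} pulses/m²")
print(f" 500 m at 60 m/s: {density_low:.2f} pulses/m²")
print(f"1000 m at 30 m/s: {density_slow:.2f} pulses/m²")
```

Under these assumptions, halving either the flying height or the ground speed doubles the nominal density, and narrowing the scan angle raises it as well.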

3. Point Density

Higher point density improves accuracy through averaging and better canopy penetration. However, beyond 8-10 points/m², additional density provides diminishing returns for accuracy.

4. Ground Classification Quality

For digital terrain models (DTMs), the accuracy of ground point classification directly impacts the final product. Classification is hardest on steep slopes, in dense forests, in urban areas, and in coastal zones.

5. Terrain Complexity

Accuracy specifications are typically reported for “open terrain” conditions. In complex environments, actual accuracy may be lower due to interpolation between sparse ground points.

How to Verify LiDAR Accuracy

Before using LiDAR data for critical applications, verify the accuracy meets your requirements.

How Processing Affects Accuracy

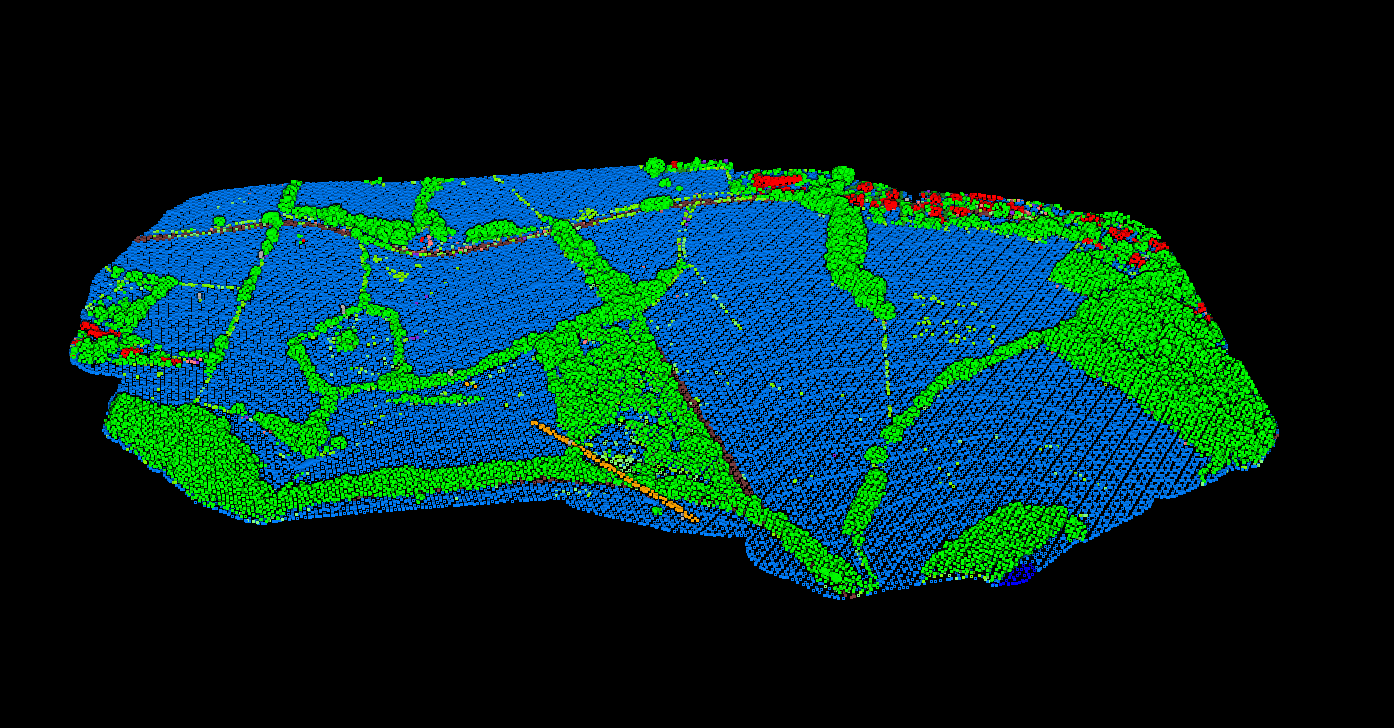

Raw LiDAR point clouds require classification before generating terrain models. The processing workflow directly impacts the accuracy of derived products.

AI-powered point cloud classification automatically separates ground, vegetation, and structures while preserving data accuracy.

1. Noise Removal: Eliminating outlier points (birds, atmospheric returns)

2. Ground Classification: Separating terrain from above-ground features

3. Surface Interpolation: Generating continuous elevation models from discrete points
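To make the three steps concrete, here is a deliberately simple sketch of each one. It is not how any production tool, Lidarvisor included, performs classification: the noise test (median absolute deviation), the ground filter (lowest point per grid cell), and the interpolation (inverse distance weighting) are basic stand-ins chosen for readability, and all names, thresholds, and sample points are hypothetical.

```python
from statistics import median

def remove_noise(points, k=5.0):
    """Step 1: drop points whose z is far from the median elevation (MAD test)."""
    zs = [p[2] for p in points]
    z_med = median(zs)
    mad = median([abs(z - z_med) for z in zs])
    if mad == 0:
        return list(points)
    return [p for p in points if abs(p[2] - z_med) <= k * mad]

def classify_ground(points, cell_size=1.0):
    """Step 2: naive ground filter that keeps the lowest point in each grid cell."""
    lowest = {}
    for x, y, z in points:
        cell = (int(x // cell_size), int(y // cell_size))
        if cell not in lowest or z < lowest[cell][2]:
            lowest[cell] = (x, y, z)
    return list(lowest.values())

def idw_elevation(ground_points, x, y, power=2.0):
    """Step 3: inverse-distance-weighted elevation at (x, y) from ground points."""
    num = den = 0.0
    for gx, gy, gz in ground_points:
        d2 = (gx - x) ** 2 + (gy - y) ** 2
        if d2 == 0:
            return gz  # query location coincides with a ground point
        w = 1.0 / d2 ** (power / 2.0)
        num += w * gz
        den += w
    return num / den

# Hypothetical (x, y, z) points in metres: mostly ground near 10 m,
# one low shrub return at 11 m, and one bird strike at 250 m.
points = [
    (0.2, 0.3, 10.0), (0.6, 0.4, 11.0), (1.5, 0.5, 10.2),
    (0.5, 1.5, 10.1), (1.5, 1.5, 10.3), (1.2, 1.1, 250.0),
]

cleaned = remove_noise(points)
ground = classify_ground(cleaned)
dtm_z = idw_elevation(ground, 1.0, 1.0)
print(len(cleaned), len(ground), round(dtm_z, 2))
```

A global elevation test like this would misfire on hilly sites, and a lowest-point filter keeps vegetation wherever no pulse reached the ground, which is why classification quality has such a direct effect on the accuracy of the resulting terrain model.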

Lidarvisor uses AI-powered classification that handles these steps automatically while maintaining your data’s accuracy. Upload your point cloud, and the system classifies points into ASPRS-standard classes without manual parameter tuning, which reduces the risk of human error in the processing workflow.

Accuracy vs Precision: Key Differences

[Figure: four panels showing the combinations of low or high precision with low or high accuracy]

Precision

Repeatability of measurements. High precision means repeated scans give consistent results, even if those results are wrong.

Accuracy

Correctness relative to true values. High accuracy means measurements are close to reality.

A LiDAR system can be highly precise but inaccurate if it has a systematic calibration error. Proper calibration is essential to achieve both.
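The distinction can be expressed numerically: the mean error (bias) reflects accuracy, the scatter of errors about that mean reflects precision, and RMSE combines both. The sketch below uses made-up repeat measurements of a single point with a known elevation; the function name is illustrative.

```python
import math
from statistics import mean, pstdev

def error_summary(measured, truth):
    """Return (bias, spread, rmse) of measured values against true values."""
    errors = [m - t for m, t in zip(measured, truth)]
    bias = mean(errors)      # systematic offset: large bias means low accuracy
    spread = pstdev(errors)  # scatter about the bias: large spread means low precision
    rmse = math.sqrt(mean([e * e for e in errors]))
    return bias, spread, rmse

# Hypothetical repeat scans of a point whose true elevation is 50.00 m.
# The readings agree closely with each other but sit about 0.30 m too high,
# as a systematic calibration error would produce.
measured = [50.30, 50.31, 50.29, 50.30]
truth = [50.00] * 4

bias, spread, rmse = error_summary(measured, truth)
print(f"bias = {bias:.3f} m, spread = {spread:.3f} m, RMSE = {rmse:.3f} m")
```

Here the spread is under a centimetre (high precision) while the bias is about 30 cm (low accuracy). Because RMSE² equals bias² plus spread², the RMSE is dominated by the calibration offset.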

Common Accuracy Misconceptions

Beyond 8-10 points/m², additional density primarily improves detail resolution, not accuracy. System calibration and ground control quality matter more for overall accuracy.

Accuracy varies by terrain type. The reported RMSE applies to open terrain; vegetated or urban areas typically have lower accuracy due to classification challenges and interpolation between sparse ground points.

Specifying tighter accuracy than needed increases acquisition and processing costs without providing meaningful benefit for your application. Match specifications to your actual requirements.

Summary

LiDAR accuracy depends on system calibration, acquisition parameters, point density, classification quality, and terrain conditions. Understanding these factors helps you select appropriate data for your application and set realistic expectations for derived products.

For most mapping and modeling applications, USGS QL1 or QL2 data (vertical RMSE of 10 cm or better) provides sufficient quality. Higher accuracy specifications are reserved for detailed engineering and critical infrastructure projects.